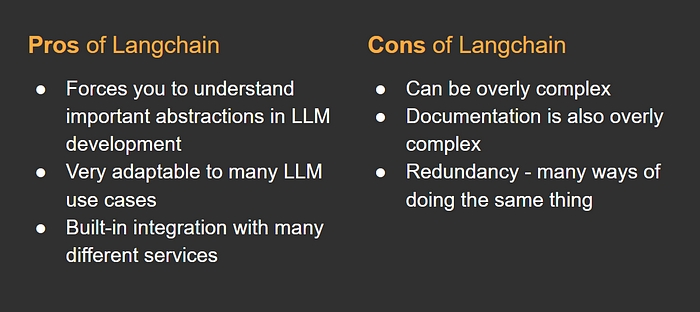

Langchain has quickly become one of the hottest open-source frameworks this year, and for many developers it is the go-to framework for building LLM applications. I’ve heard mixed reviews from developers about the usability and design of Langchain’s SDK, but it certainly offers more features than the OpenAI SDK, along with many integrated solutions for various LLM applications.

In my opinion, the key to using Langchain well is understanding its abstractions. While the OpenAI SDK offers a very barebones toolkit, Langchain offers many tools for developing different aspects of LLM apps, from constructing prompts and managing memory to creating agents.

In this article, I will go over the basic components of Langchain that you should know to get started. If you are completely new to LLM development, check out my earlier article about the OpenAI SDK and the basics of GPT models.

Getting started in Langchain

Getting started with Langchain is similar to getting started with OpenAI’s SDK: the typical method is to import the ChatOpenAI object and instantiate it. By default, the model’s output is just the AI response as a string, so no JSON parsing is necessary.

from langchain.chat_models import ChatOpenAI

# replace the placeholder string with your own OpenAI API key
chat_model = ChatOpenAI(model='gpt-3.5-turbo', openai_api_key='<your api key>')

response = chat_model.predict('hi, how are you?')
print(response)

An alternative way to set the API key outside of the model object is through OpenAI’s library.

# alternative way to set api key for model
import openai
from langchain.chat_models import ChatOpenAI

openai.api_key = '<your api key>'  # same method as in the OpenAI SDK

# the key is still passed explicitly here; Langchain can also read it
# from the OPENAI_API_KEY environment variable
chat_model = ChatOpenAI(model='gpt-3.5-turbo', openai_api_key='<your api key>')

Here’s how you use the old text-davinci model:

from langchain.llms import OpenAI

llm = OpenAI()
response = llm.predict('who is michael jordan?')
print(response)

Since the text-davinci model is older and OpenAI plans to deprecate it soon, I recommend using the chat model gpt-3.5-turbo by default. There's really no reason to use the old model at this point.

Tracking token usage

Token usage is not captured by the model instance itself. Instead, you have to use a Langchain callback, like so:

from langchain.callbacks import get_openai_callback

# every model call made inside the with block is counted by the callback
with get_openai_callback() as callback:
    response = chat_model.predict('hi')
    print(response)
    print(callback)

    response2 = chat_model.predict('how are you')
    print(response2)
    print(callback)

I find this a rather inconvenient way to get token usage information, though. It seems that Langchain will roll out its new developer tool, Langsmith, to help with the debugging process.
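To make the accumulation behaviour of the callback more concrete, here is a toy sketch of the same context-manager pattern in plain Python. It is not Langchain's implementation: `UsageTracker`, `track_usage`, and `fake_predict` are hypothetical names, the "tokens" are just whitespace-separated words, and the tracker is passed in explicitly, whereas the real callback is picked up automatically inside the `with` block.

```python
from contextlib import contextmanager
from dataclasses import dataclass


@dataclass
class UsageTracker:
    """Hypothetical stand-in for a usage callback; not Langchain's class."""
    total_tokens: int = 0
    successful_requests: int = 0

    def record(self, tokens: int) -> None:
        # Add one call's token count to the running totals.
        self.total_tokens += tokens
        self.successful_requests += 1


@contextmanager
def track_usage():
    """Yield a single tracker shared by every call inside the with block."""
    tracker = UsageTracker()
    yield tracker


def fake_predict(text: str, tracker: UsageTracker) -> str:
    """Pretend model call: counts whitespace-separated words as 'tokens'."""
    reply = f"echo: {text}"
    tracker.record(len(text.split()) + len(reply.split()))
    return reply


with track_usage() as usage:
    fake_predict("hi", usage)
    after_first = usage.total_tokens
    fake_predict("how are you", usage)
    after_second = usage.total_tokens

print(after_first, after_second)  # the second value includes the first call
print(usage)
```

The point of the sketch is that the totals keep growing for as long as you stay inside the `with` block, which is why printing the callback twice in the Langchain snippet shows cumulative numbers rather than per-call numbers.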

Message Schemas

Recall from my earlier article that OpenAI’s Python library has 3 distinct message roles: system, user (or human), and assistant (or AI). Langchain represents each of these roles with its own object, which it calls a “message schema”. Here’s how it’s done:

from langchain.schema import AIMessage, HumanMessage, SystemMessage

# instantiating system message object
system_message = SystemMessage(content='You are Peter Parker, \
a high school student, and a fictional character from Marvel Comics \
who is also known as Spider-Man. Never give away your identity. \
Now you will respond as Peter Parker, not Spider-Man.')

# instantiating human message object
human_message = HumanMessage(content='who is spidermans real identity?')

# get response from model
response = chat_model.predict_messages([system_message, human_message])
print(response)

# passing the messages without predict_messages method works as well
response = chat_model([system_message, human_message])
print(response)

Prompt Templates

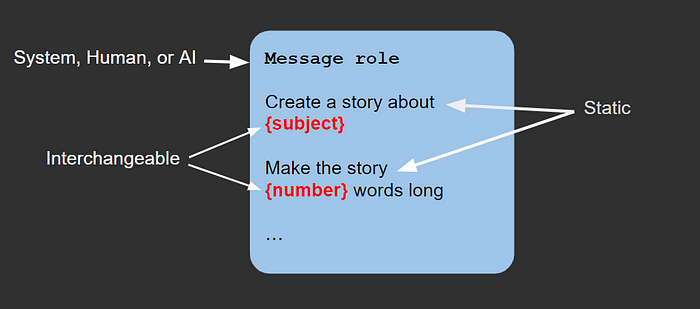

One of the core features of the Langchain framework is how it manages and constructs prompts. Looking at the documentation, I notice that Langchain takes prompt construction very seriously, offering many ways to carefully craft and design your prompts. Many of its objects and functions let you customize and swap out parts of your prompt based on your desired output. A prompt template allows you to keep parts of the prompt static while allowing other parts to be filled in dynamically. Let’s demonstrate this with the code snippet below.

from langchain.prompts import ChatPromptTemplate
from langchain.prompts.chat import SystemMessagePromptTemplate, HumanMessagePromptTemplate

# introduce template object
template = ChatPromptTemplate.from_messages([
    # system prompt that instructs the AI to role play as a superhero that won't reveal his/her identity
    SystemMessagePromptTemplate.from_template(
        "You are the fictional superhero {superhero} and your real identity is {real_identity}. \
You must keep your identity a secret. Never give away your identity. \
Now you will respond as {real_identity}. If asked about the real identity \
of {superhero}, you will respond with I don't know or something similar. If asked \
about other superheroes, you can answer honestly."),
    # the user's question is injected here
    HumanMessagePromptTemplate.from_template("{user_input}"),
])

# chat model with temperature=0 for more deterministic output
chat_model = ChatOpenAI(temperature=0)

# get a response from our chat model with the prompt template input
response = chat_model(
    template.format_messages(superhero="SpiderMan",
                             real_identity="Peter Parker",
                             user_input="What is Spiderman's real identity?"))

print(type(response))
print(response)

In this example, the system message instructs the model not to reveal the identity of the specified {superhero}. Run this code and try asking about another superhero to see what happens. Prompt templates follow a structure very similar to Python f-strings, so the syntax is quite intuitive for experienced Python developers.
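To illustrate the f-string analogy, here is a minimal plain-Python sketch of the same idea using `str.format`. It is only an approximation of what a prompt template does, not Langchain's implementation; `SYSTEM_TEMPLATE`, `HUMAN_TEMPLATE`, and `format_messages` are hypothetical names chosen for this example, and the role/content dictionaries simply mimic the OpenAI message format.

```python
from typing import Dict, List

# Static text with named placeholders, analogous to a prompt template.
SYSTEM_TEMPLATE = (
    "You are the fictional superhero {superhero} and your real identity "
    "is {real_identity}. Never give away your identity."
)
HUMAN_TEMPLATE = "{user_input}"


def format_messages(**values: str) -> List[Dict[str, str]]:
    """Hypothetical helper: fill both templates and return role/content dicts."""
    return [
        {"role": "system", "content": SYSTEM_TEMPLATE.format(**values)},
        {"role": "user", "content": HUMAN_TEMPLATE.format(**values)},
    ]


# The static wording stays the same; only the placeholder values change.
messages = format_messages(
    superhero="Batman",
    real_identity="Bruce Wayne",
    user_input="Who is Batman?",
)
for message in messages:
    print(message["role"], "->", message["content"])
```

In this sketch, leaving out one of the placeholder values raises a `KeyError`, which gives a rough feel for why a template object that knows its own input variables is convenient.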

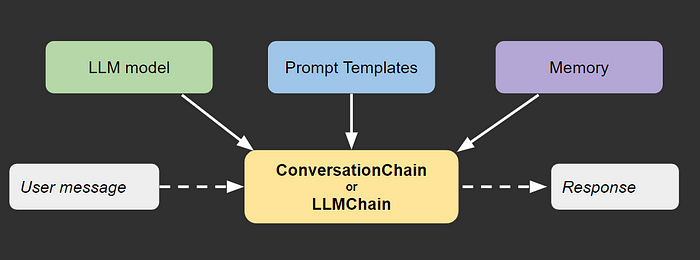

Conversation Memory

An important aspect of creating coherent chatbots is conversation memory. By themselves, LLMs are stateless, meaning they don’t retain previous chat messages between calls. Fortunately, Langchain’s design offers a few solutions for dealing with the missing conversation memory component. In classic Langchain fashion, another class of objects is introduced to handle memory.
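To see what "stateless" means in practice, here is a toy sketch in plain Python. The model is faked by `stateless_model`, and `BufferMemory` is a hypothetical minimal class, not Langchain's; the assumption is simply that the only way a stateless model can "remember" earlier turns is for the caller to resend the whole history on every call.

```python
from typing import Dict, List

Message = Dict[str, str]


def stateless_model(messages: List[Message]) -> str:
    """Fake model: it only knows what is in the messages passed to this call."""
    user_turns = [m["content"] for m in messages if m["role"] == "user"]
    return f"I can see {len(user_turns)} user message(s); the first was: {user_turns[0]!r}"


class BufferMemory:
    """Hypothetical minimal buffer: stores every turn and replays all of them."""

    def __init__(self) -> None:
        self.history: List[Message] = []

    def chat(self, user_input: str) -> str:
        # Append the new user turn, send the full history, then store the reply.
        self.history.append({"role": "user", "content": user_input})
        reply = stateless_model(self.history)
        self.history.append({"role": "assistant", "content": reply})
        return reply


memory = BufferMemory()
first = memory.chat("Who won the NBA finals in 1990?")
second = memory.chat("Who did they play against?")
print(first)
print(second)
```

Langchain's ConversationBufferMemory, used in the code that follows, packages this kind of bookkeeping into a reusable object so you don't have to manage the history list yourself.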

# Import necessary modules from the 'langchain' package.
from langchain.chat_models import ChatOpenAI
from langchain.memory import ConversationBufferMemory
from langchain.chains import ConversationChain

# Create an instance of ConversationBufferMemory, which is used to store conversation history.
memory = ConversationBufferMemory()

# Create an instance of the ChatOpenAI chat model
chat_model = ChatOpenAI()

# Create a ConversationChain object, which combines the chat model and memory for conversation management.
conversation = ConversationChain(
    llm=chat_model,  # Set the chat model to be used by the ConversationChain.
    memory=memory,  # Set the conversation memory to be used by the ConversationChain.
    verbose=False,  # Disable verbosity for the ConversationChain.
)

# Test the conversation capabilities with a sequence of messages, notice how it stays on topic
messages = [
    "Who won the NBA finals in 1990?",
    "Who did they play against?",
    "How many games did they play?",
    "Who was the finals MVP?",
]

for message in messages:
    print(f"Conversation AI: \n{conversation.predict(input=message)}\n")

Chatbot with Rick Sanchez’s personality

Here’s a fun example that combines a prompt template with conversation memory. To get the utility of both custom prompts and conversation memory, we use the LLMChain object. Langchain's LLMChain is a versatile object that can combine many features of the LLM toolkit.

# Import necessary modules from the 'langchain' package.
from langchain.chat_models import ChatOpenAI
from langchain.schema import SystemMessage
from langchain.prompts import ChatPromptTemplate, HumanMessagePromptTemplate, MessagesPlaceholder
from langchain.memory import ConversationBufferMemory
from langchain.chains import LLMChain

# Instantiate the ChatOpenAI chat model (the API key is read from the OPENAI_API_KEY environment variable).
chat_model = ChatOpenAI()

# Define a system prompt for role-playing as Rick Sanchez from Rick and Morty.
rick_system_prompt = """You are role-playing Rick Sanchez from the popular Rick and Morty animation. \
Make sure to refer to the user as Morty and use Rick's mannerisms. \
Rick is a genius and he knows it. \
Make sure to answer in a condescending and rude manner."""

prompts = ChatPromptTemplate.from_messages([
    SystemMessage(content=rick_system_prompt),  # The persistent system prompt
    MessagesPlaceholder(variable_name="chat_history"),  # Where the stored chat history will be injected
    HumanMessagePromptTemplate.from_template("{user_input}"),  # Where the human input will be injected
])

# Instantiate a memory object with a specific memory key and the option to return messages.
memory = ConversationBufferMemory(memory_key="chat_history", return_messages=True)

# Initialize the LLMChain, combining the chat model, prompts, and memory.
chat_llm_chain = LLMChain(
    llm=chat_model,
    prompt=prompts,
    memory=memory,
    verbose=True,
)

# Run the conversation loop.
while True:
    user_input = input("You (type 'exit' to quit): ")
    if user_input == "exit":  # type 'exit' to break the conversation loop.
        break
    # Generate a response from the chat model, with Rick's personality.
    response = chat_llm_chain.predict(user_input=user_input)
    print(response)

Additional notes

- Most Langchain objects have the additional_kwargs: {} element. I have never seen it put to use... If you know what it's good for, please comment.

- In order to get the most use out of Langchain, you should use their predefined objects.

- Using Langchain forces you to adopt certain concepts and modularize them, e.g. prompt construction and conversation memory. This pushes the entire community to think the same way.

- There is a parameter in many of the Langchain objects called verbose. Setting it to True is great for seeing the entire prompt fed into the model, which is useful for debugging.

- To explore the contents of a Langchain object, they usually have a json() or dict() method that presents everything in a structured format. Try it out.

Resources

- The official Langchain documentation is at https://api.python.langchain.com/ for the API reference and https://python.langchain.com/ for cookbook examples. I highly recommend checking both out.

- https://beta.character.ai/ is a cool platform that provides character prompts for your chatbot